younes on X: "New release for PEFT library! 🔥 Do you know that you can now easily "merge" the LoRA adapter weights into the base model, and use the merged model as

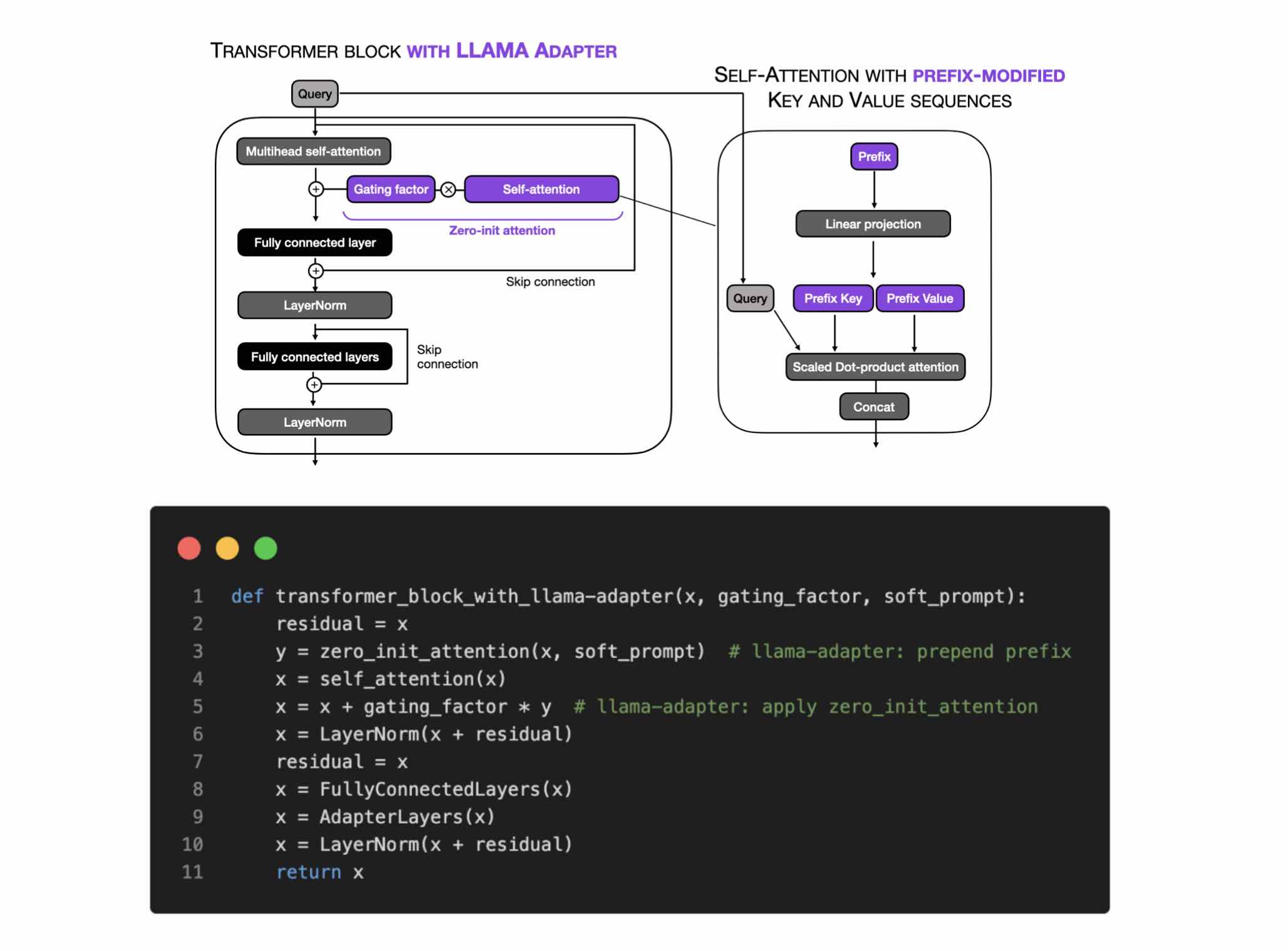

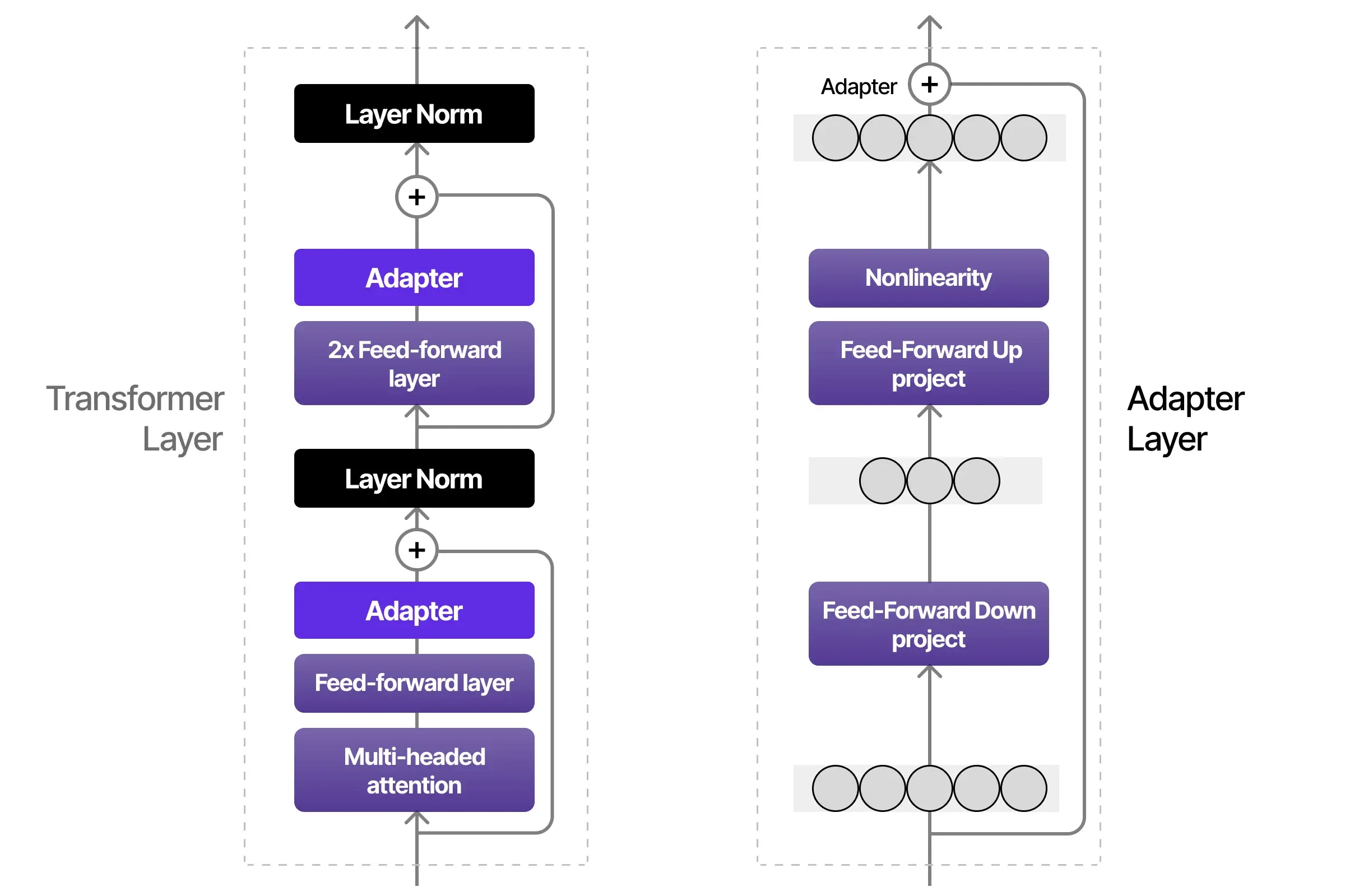

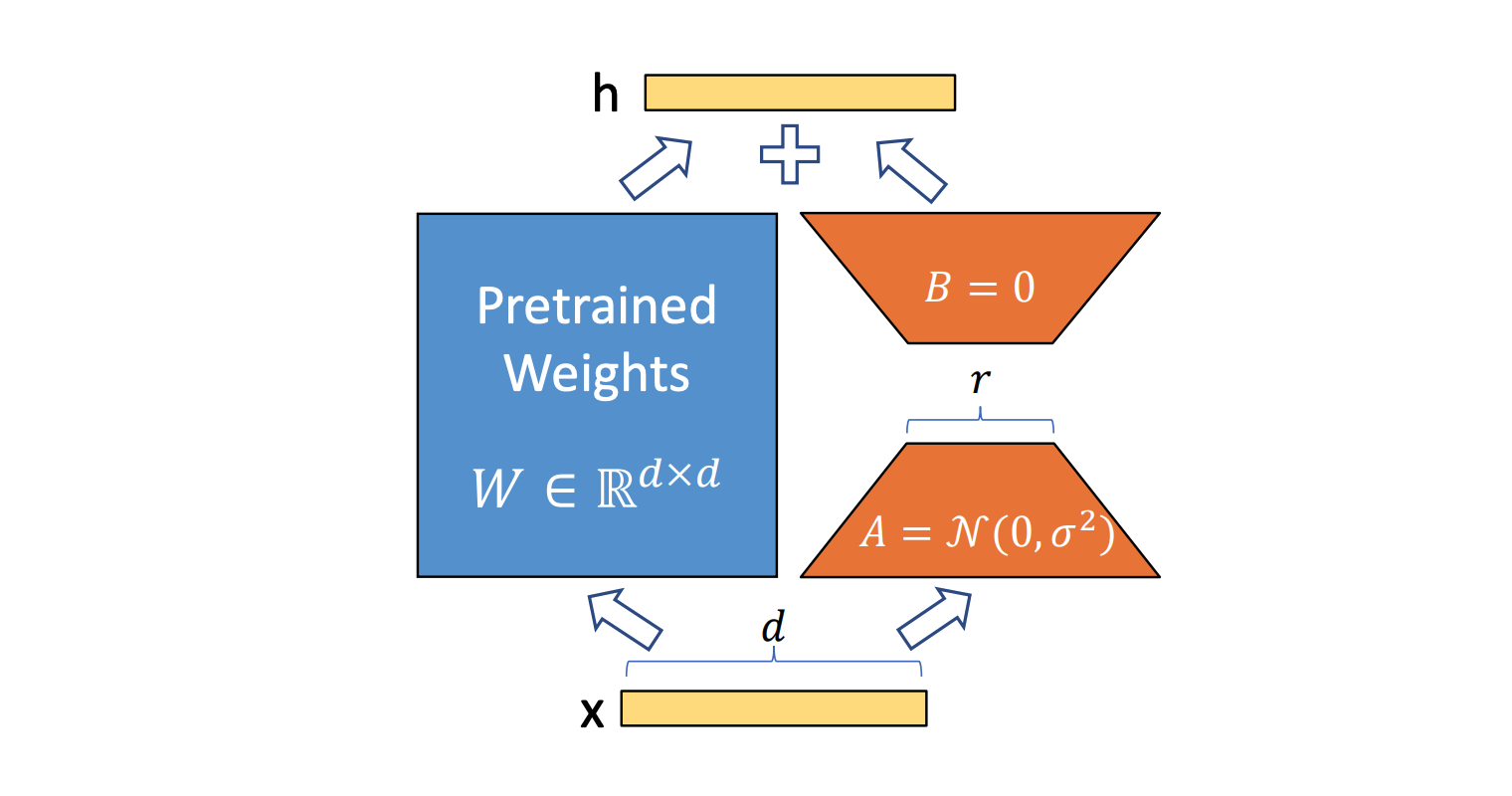

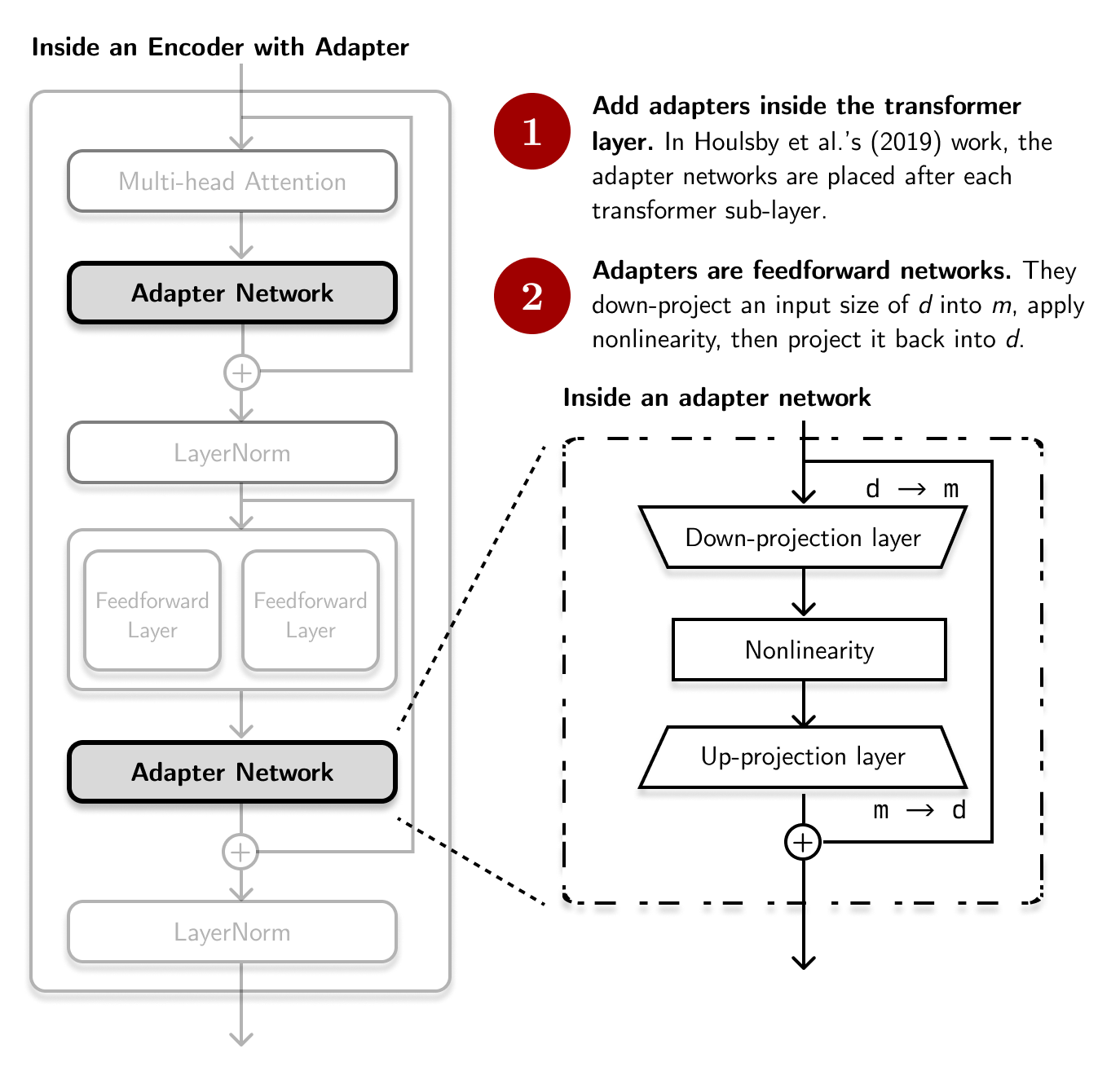

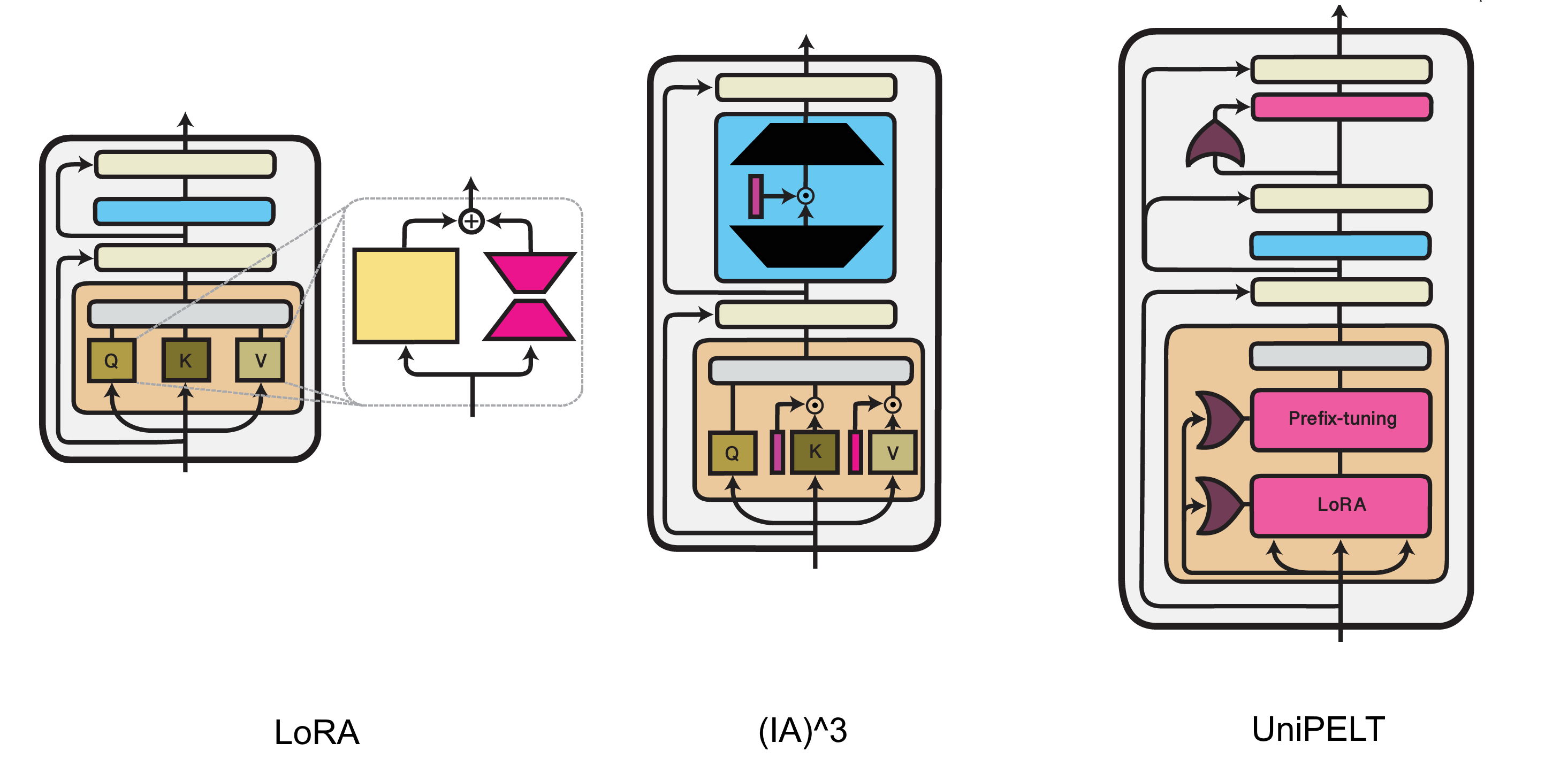

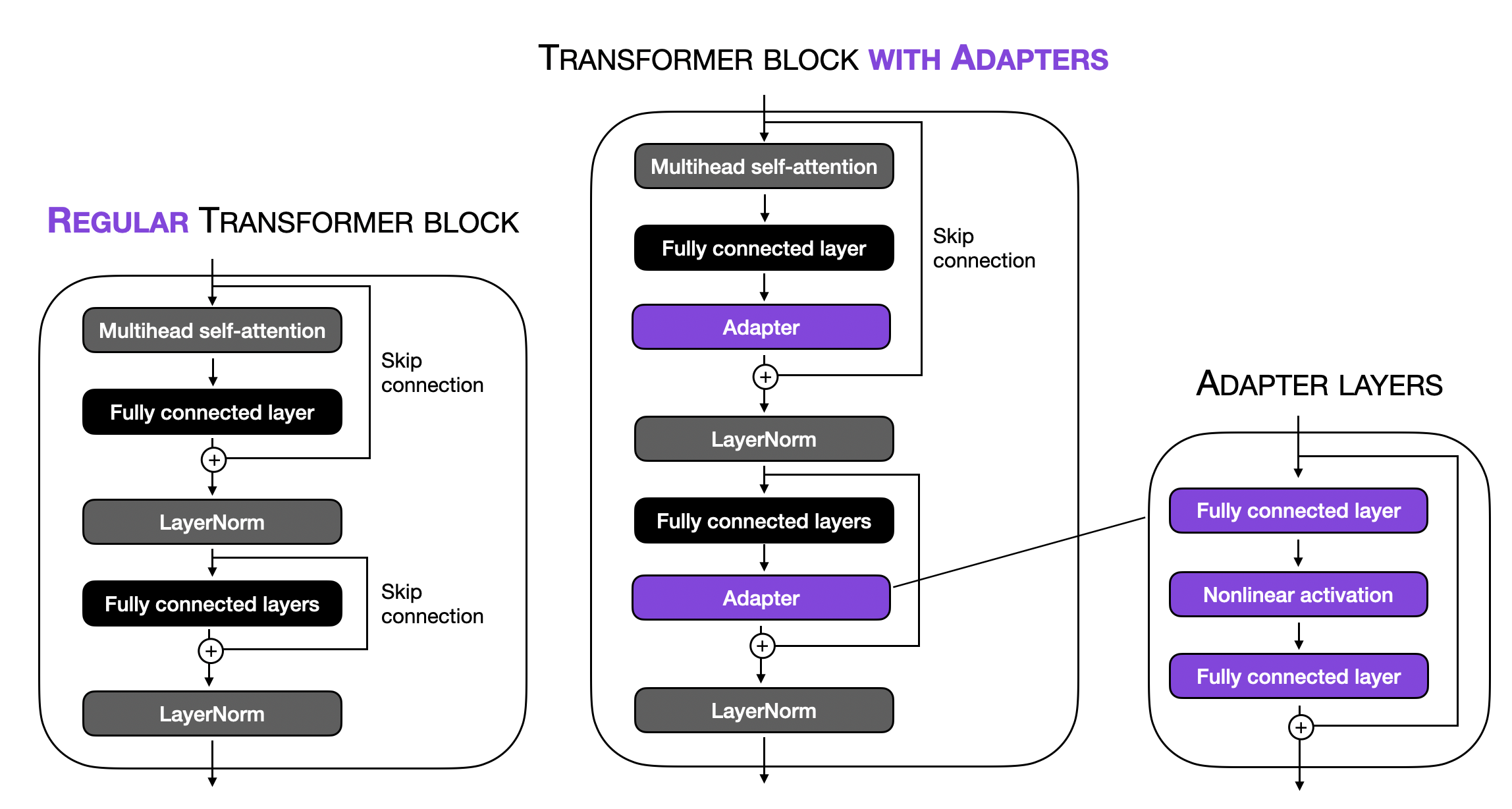

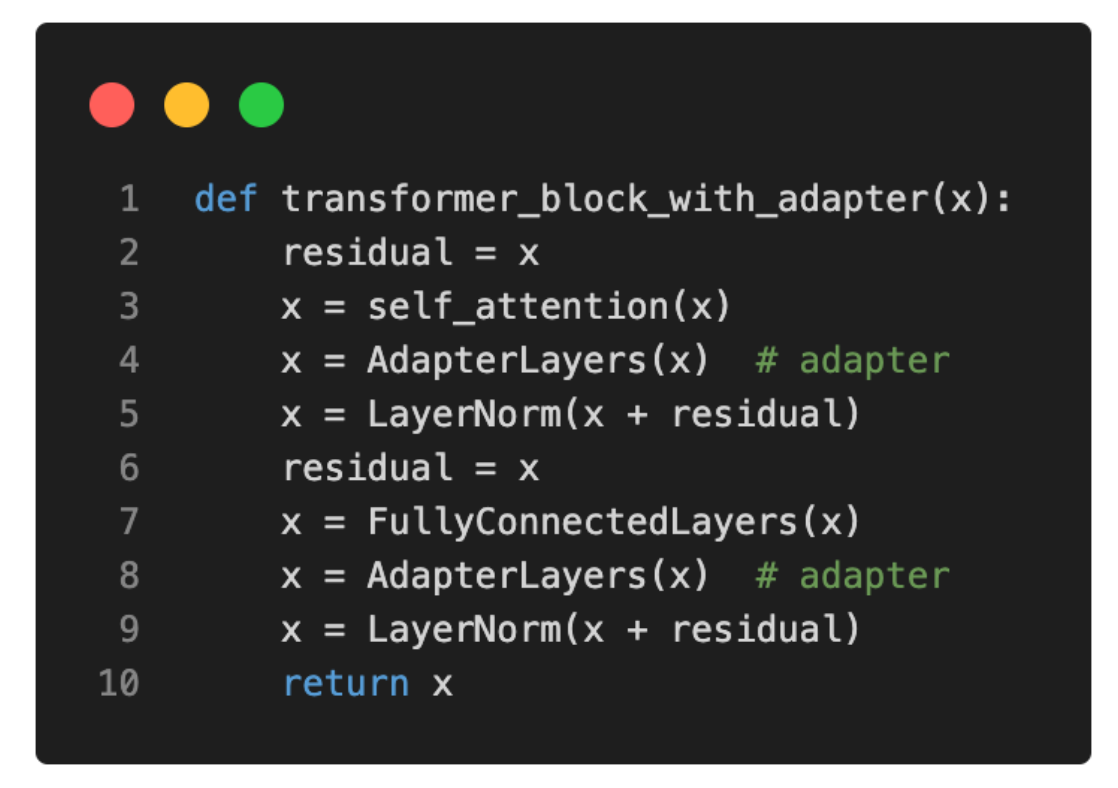

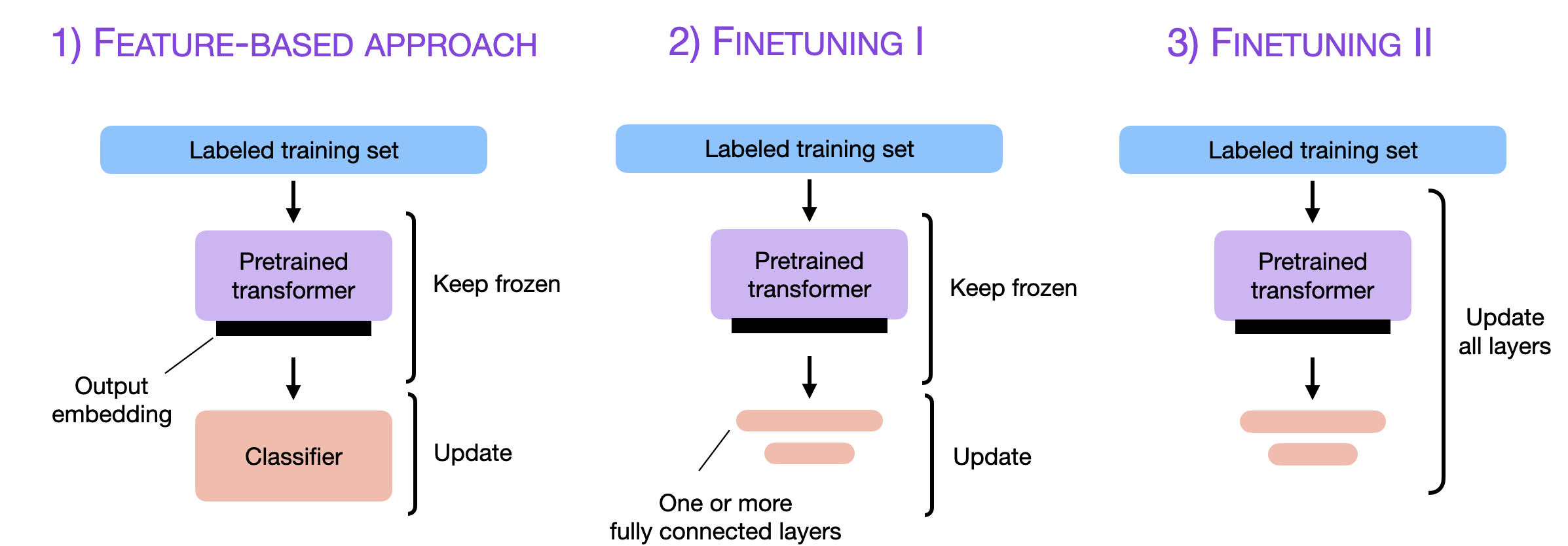

Understanding Parameter-Efficient Finetuning of Large Language Models: From Prefix Tuning to LLaMA-Adapters

Method to unload an adapter, to allow the memory to be freed · Issue #738 · huggingface/peft · GitHub

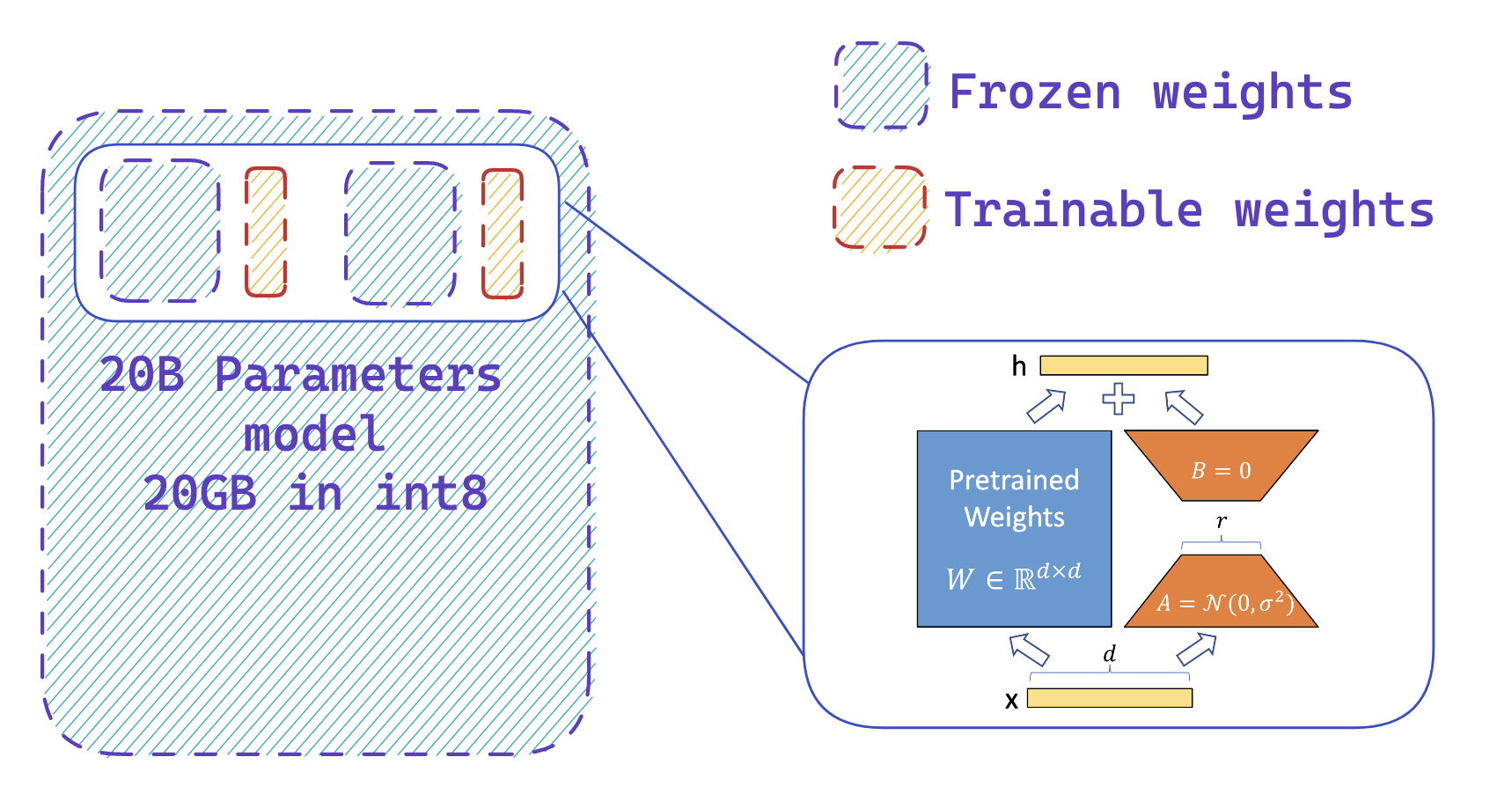

Summary Of Adapter Based Performance Efficient Fine Tuning (PEFT) Techniques For Large Language Models | smashinggradient

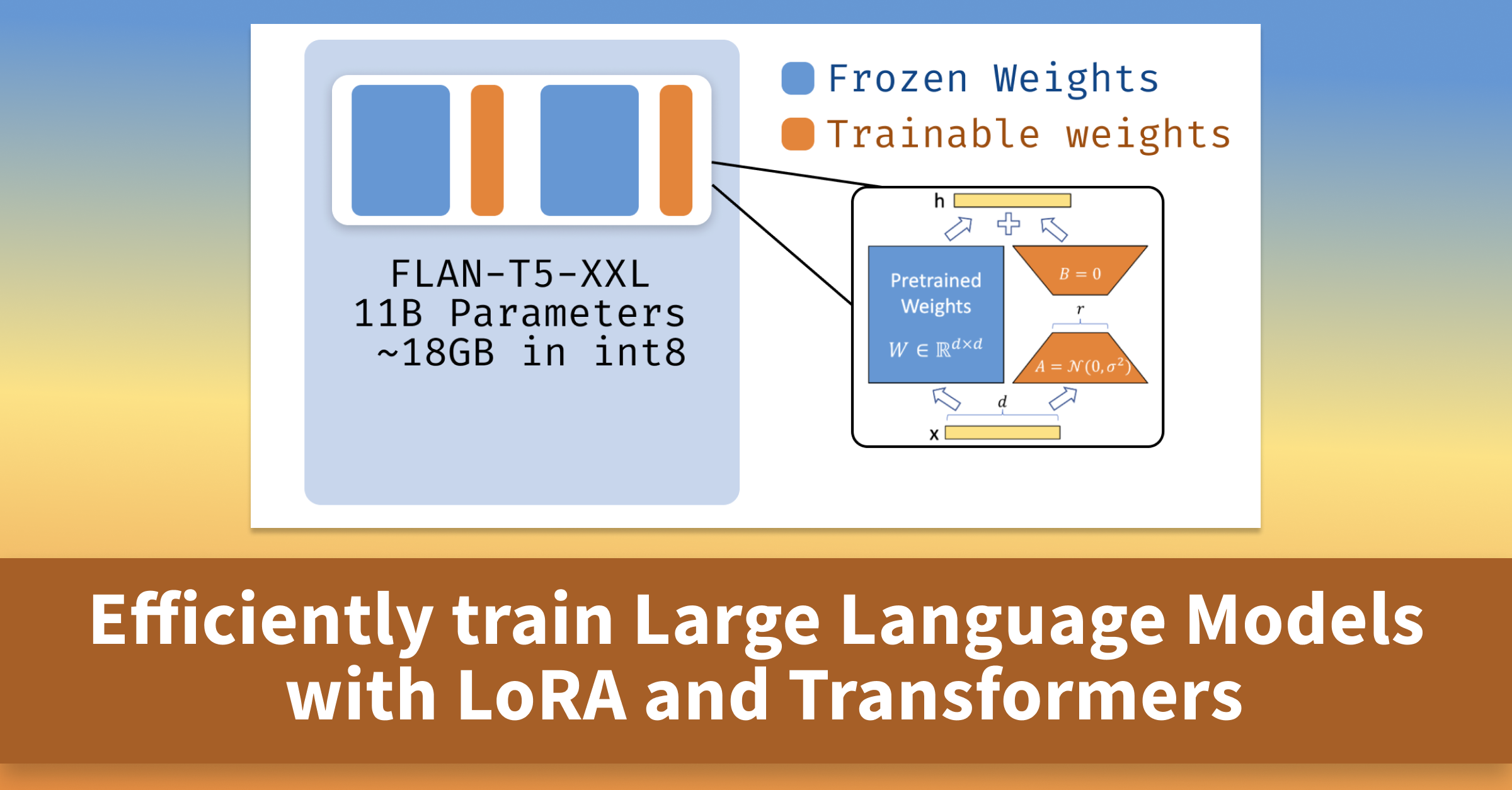

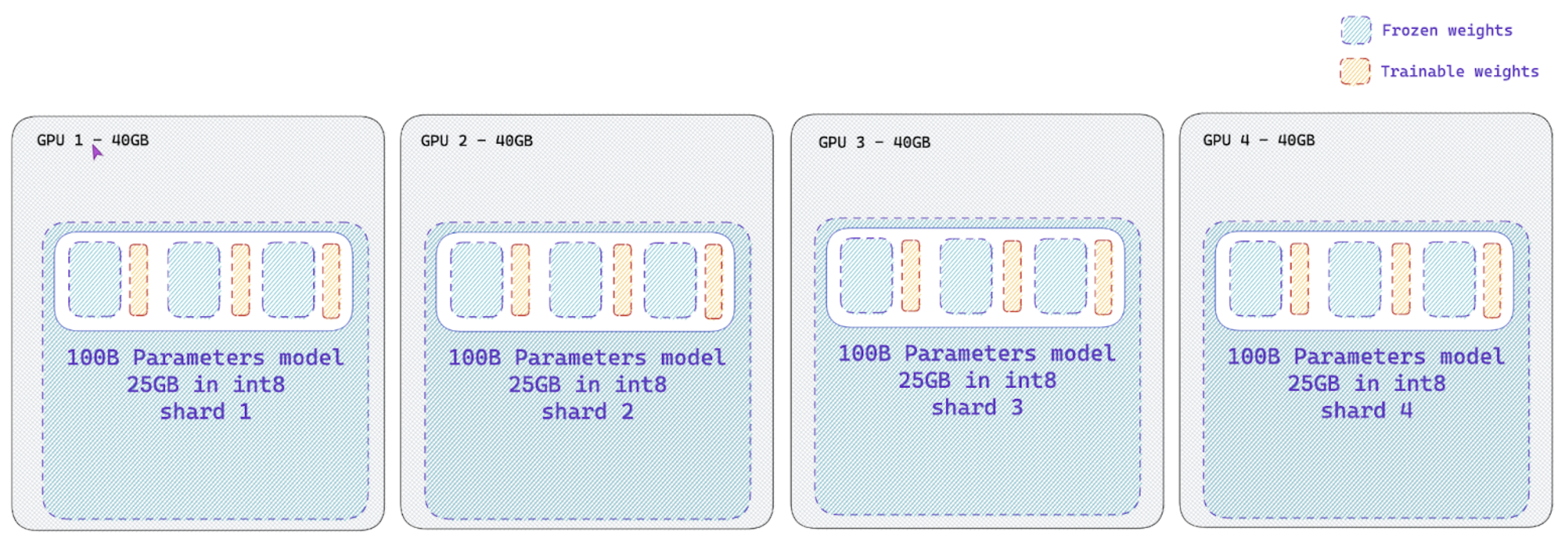

Fine Tuning LLM: Parameter Efficient Fine Tuning (PEFT) — LoRA & QLoRA — Part 1 | by A B Vijay Kumar | Medium